From Proof-of-Concept to Real-World Impact: How to Successfully Deploy Machine Learning Models

Machine learning (ML) has revolutionized industries by unlocking the potential to automate tasks, generate new insights from data, and create entirely new product experiences. Yet, many practitioners and aspiring ML engineers discover a significant gap between training a great model in a lab or a Jupyter Notebook and actually running that model in production to solve real business problems.

In this article, we’ll explore the machine learning project lifecycle—from choosing what to build, to managing data effectively, to finally deploying a model in a stable, scalable way. Along the journey, we’ll highlight real-world examples (such as defect detection in manufacturing and speech recognition) that reveal how to think beyond pure model accuracy and focus on building reliable systems that provide true value.

1. Why Production-Ready ML Matters

Training a model to high accuracy on a holdout set is a genuine achievement, but it’s only the start of your ML journey. Once you venture beyond the lab environment, you’ll face issues like data drift (a change in the distribution of inputs) and concept drift (a change in the relationship between inputs and outputs), new user behaviors, edge deployment constraints, and the large body of code that surrounds your model.

In fact, if you imagine drawing a box to represent your ML algorithm, you’ll typically find it occupies just a small fraction of the overall production system. The remaining infrastructure—data pipelines, monitoring tools, APIs, and user interfaces—usually takes far more time to build, refine, and maintain. Moreover, employers often ask not just “How do you train a model?” but “How do you deploy and monitor it?” Gaining these practical deployment skills significantly boosts your capability as an ML engineer or data scientist.

2. The ML Project Lifecycle

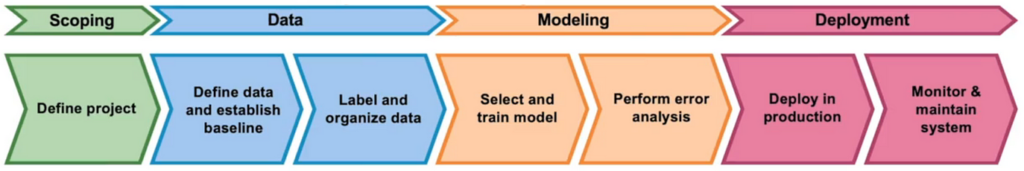

To tackle the challenge of going from a promising idea to a functioning, value-generating ML system, it helps to visualize a clear lifecycle:

- Scoping: Decide on the problem you want to solve and define the inputs (X) and outputs (Y) for your model. Establish metrics (accuracy, latency, throughput, etc.) and estimate resources such as time, budget, and computing requirements.

- Data: Acquire or collect the data you need, which is often a much bigger task than it appears. You must define consistent labeling standards, ensure label quality, and organize everything in a way that supports easy access, updates, and versioning.

- Modeling: Select and train your model. This step involves choosing the right algorithm or architecture, tuning hyperparameters, and iterating based on error analysis. Critically, you don’t just fix the model; you might need to revisit the data itself, collecting additional examples that address specific error modes.

- Deployment: Integrate the model into a prediction server (whether on the edge or in the cloud), build user-facing and backend services (APIs, GUIs), and monitor performance to catch data drift over time. It’s often here, once real data starts streaming in, that you learn many of the project’s most important lessons, prompting further refinements.
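To make the scoping metrics concrete, here is a minimal Python sketch that runs a prediction function over a small evaluation set and checks accuracy, 95th-percentile latency, and throughput against agreed targets. The `ScopingTargets` class, the `meets_targets` function, and the threshold values are hypothetical illustrations. Throughput is measured with sequential requests on a single worker, so it does not reflect behavior under concurrent load.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Sequence


@dataclass
class ScopingTargets:
    """Hypothetical targets agreed on during scoping."""
    min_accuracy: float        # fraction of correct predictions, e.g. 0.95
    max_p95_latency_ms: float  # 95th-percentile latency per request
    min_throughput_qps: float  # queries handled per second


def meets_targets(predict: Callable[[Any], Any], inputs: Sequence[Any],
                  labels: Sequence[Any], targets: ScopingTargets) -> Dict[str, Any]:
    """Run predictions one at a time and compare the measurements to the targets."""
    if len(inputs) != len(labels) or len(labels) < 2:
        raise ValueError("need at least two (input, label) pairs of equal length")
    latencies_ms = []
    correct = 0
    start = time.perf_counter()
    for x, y in zip(inputs, labels):
        t0 = time.perf_counter()
        correct += int(predict(x) == y)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start

    accuracy = correct / len(labels)
    # With n=20, quantiles() returns 19 cut points; the last is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]
    # Sequential, single-worker throughput; it does not model concurrent load.
    throughput = len(labels) / elapsed if elapsed > 0 else float("inf")
    return {
        "accuracy": accuracy,
        "p95_latency_ms": p95,
        "throughput_qps": throughput,
        "ok": (accuracy >= targets.min_accuracy
               and p95 <= targets.max_p95_latency_ms
               and throughput >= targets.min_throughput_qps),
    }
```

For example, a toy classifier `lambda x: x >= 0` evaluated on `[-2, -1, 1, 2]` with labels `[False, False, True, True]` scores an accuracy of 1.0; whether `ok` is true then depends only on the latency and throughput targets you set.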

While the diagram might appear linear, real-world ML projects are iterative. You frequently loop back from deployment to error analysis and data collection to keep the system relevant and robust over time.
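One common way to close that loop is to tag evaluation examples by condition and compare error rates per tag, so the worst-performing slices point to where new data is most needed. The sketch below assumes each example is a `(tags, is_correct)` pair; the tag names and function names are hypothetical. In practice you would also weigh how often each slice occurs in production, not just its error rate.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def error_rate_by_tag(examples: Iterable[Tuple[Set[str], bool]]) -> Dict[str, float]:
    """Map each tag to the error rate of the evaluation examples carrying it."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for tags, is_correct in examples:
        for tag in tags:  # an example with several tags counts toward each one
            totals[tag] += 1
            if not is_correct:
                errors[tag] += 1
    return {tag: errors[tag] / totals[tag] for tag in totals}


def worst_slices(rates: Dict[str, float], k: int = 2) -> List[str]:
    """Return the k tags with the highest error rate (candidates for new data)."""
    return sorted(rates, key=rates.get, reverse=True)[:k]
```

If clips tagged `child_voice` are almost always wrong while `clean` clips are not, `worst_slices` ranks `child_voice` first, suggesting where targeted data collection may pay off.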

3. Real-World Example: Automated Visual Defect Inspection

A classic illustration of this lifecycle in action is a smartphone manufacturing line aiming to detect scratches and other defects using computer vision. After you train a robust computer vision model (e.g., a neural network) in a notebook, you need to decide where and how to deploy it:

- Edge Deployment: An “edge device” in the factory can run the model locally, capturing images from a camera, sending them to a local prediction service, and making real-time decisions about each phone. This approach is typically preferred for low-latency, high-reliability environments where internet connectivity is not guaranteed.

- Cloud Deployment: Alternatively, images can be sent to a remote prediction server. This can simplify model updates but requires stable network access.

Data drift often rears its head when conditions on the factory floor (like lighting) differ from your training environment, causing performance to degrade. While some might say “That’s not a machine learning problem,” you’ll often need to update the data or re-engineer the environment to ensure high-quality images. Understanding that your responsibility extends beyond the model is key to delivering a system that truly works.
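One lightweight way to catch a lighting change before it silently erodes accuracy is to compare a simple image statistic, such as mean pixel brightness, between training images and recent production images. The sketch below uses the population stability index (PSI), a common drift score. The 10 bins over the 0 to 255 range and the 0.25 alert threshold are assumptions (0.25 is a frequently quoted rule of thumb, not a universal constant), and computing per-image brightness is left to whichever imaging library you use.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10, lo: float = 0.0, hi: float = 255.0,
                               eps: float = 1e-6) -> float:
    """PSI between a reference sample and a new sample of one scalar feature."""
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            clamped = min(max(v, lo), hi)
            idx = min(int((clamped - lo) / width), bins - 1)  # hi goes to the last bin
            counts[idx] += 1
        # Floor empty bins at eps so the log below is always defined.
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected).
    return sum((a - e) * math.log(a / e) for e, a in zip(p, q))


def brightness_drifted(train_brightness: Sequence[float],
                       prod_brightness: Sequence[float],
                       threshold: float = 0.25) -> bool:
    """Flag drift when PSI exceeds an assumed rule-of-thumb threshold."""
    return population_stability_index(train_brightness, prod_brightness) > threshold
```

Identical samples give a PSI of 0, while a production batch that is much darker than the training set lands in different bins and pushes the score well past the threshold.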

4. Real-World Example: Speech Recognition

Another powerful example is speech recognition. Widely used in smartphones and virtual assistants, modern speech recognition systems rely heavily on deep learning to transcribe audio to text accurately.

- Scoping: You’ll set goals around accuracy, latency (how quickly you get a result), and throughput (how many queries you handle per second). You might plan to serve voice search requests, so response speed is crucial.

- Data Management: Getting consistent transcriptions is surprisingly tricky. For instance, if one transcriber types “Um today’s weather” while another omits “Um” entirely, the model may struggle with inconsistent labeling. Clearly defined labeling guidelines ensure your dataset is cohesive and supports higher accuracy.

- Modeling: In many research contexts, the dataset is “fixed,” and the focus lies on refining model architectures. In production, you have flexibility to modify and improve both the data and the model. Often, collecting targeted data for underrepresented demographics (e.g., children’s voices) or specific noisy conditions can yield faster improvements than repeatedly tweaking network architectures.

- Deployment: A typical deployment pipeline might include Voice Activity Detection (VAD) on the phone to trim silent segments, sending only relevant audio to a cloud-based server for transcription. The server returns text and (for voice search) relevant results. Monitoring remains essential; if you find a rising number of incorrectly transcribed queries from a new demographic group, you’ll need to collect more data and retrain the model to handle that group effectively.
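The VAD step can be illustrated with a toy energy-based detector that drops low-energy frames before audio is sent to the server. This is a simplification: production systems typically rely on more robust detectors (for example, trained models), and the 160-sample frame (10 ms at an assumed 16 kHz sample rate) and the energy threshold below are illustrative values.

```python
from typing import List, Sequence


def trim_silence(samples: Sequence[float], frame_size: int = 160,
                 energy_threshold: float = 1e-3) -> List[float]:
    """Toy energy-based VAD: keep frames whose mean energy exceeds a threshold.

    Assumes float samples in [-1.0, 1.0]; frame_size=160 is 10 ms at 16 kHz.
    """
    voiced: List[float] = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        energy = sum(s * s for s in frame) / len(frame)  # mean squared amplitude
        if energy > energy_threshold:
            voiced.extend(frame)
    return voiced
```

Only the frames that carry speech survive, which reduces the amount of audio the phone has to upload.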

5. Data-Centric vs. Model-Centric Approaches

In academic and research settings, model-centric improvements—trying every new neural architecture—are common. However, in production-focused teams, adopting a data-centric approach (ensuring data consistency, targeting specific error modes, refining labeling guidelines) often leads to bigger gains with less effort. It’s often less expensive and faster to collect or clean a strategically chosen dataset than it is to develop entirely new algorithmic solutions.
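Labeling consistency can often be enforced in code once the guideline is written down. The sketch below encodes a hypothetical transcription guideline (lowercase, drop fillers such as “um”, keep apostrophes) and flags clips whose labelers still disagree after normalization. The filler list and function names are assumptions for illustration; whichever convention you choose, applying it uniformly is what matters.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical guideline: lowercase, drop filler words, keep apostrophes,
# discard other punctuation, and single-space the remaining words.
FILLERS = {"um", "uh", "erm"}


def normalize_transcript(text: str) -> str:
    text = text.lower().replace("\u2019", "'")  # treat curly apostrophes like straight ones
    words = re.findall(r"[a-z0-9']+", text)
    return " ".join(w for w in words if w not in FILLERS)


def conflicting_clips(labels: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Return clips whose transcripts still disagree after normalization."""
    by_clip: Dict[str, Set[str]] = defaultdict(set)
    for clip_id, transcript in labels:
        by_clip[clip_id].add(normalize_transcript(transcript))
    return {cid: sorted(v) for cid, v in by_clip.items() if len(v) > 1}
```

Under this guideline, “Um today’s weather” and “today’s weather” collapse to the same label, so only genuine disagreements (such as “play some jazz” vs. “play sum jazz”) are sent back for review.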

6. The Role of MLOps

The need to manage these steps, especially “last-mile” tasks like setting up data pipelines, monitoring tools, continuous integration, and automated retraining, has given rise to MLOps. MLOps combines software engineering best practices (like DevOps) with the unique needs of ML systems. Platforms such as LandingLens (for computer vision) aim to streamline the workflow from scoping through data management, modeling, and deployment.
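As a small illustration of the monitoring side of MLOps, the class below keeps a rolling window of recent prediction outcomes and signals when the error rate crosses a threshold, which could trigger a retraining or data-collection review. It assumes you have some feedback signal on whether predictions were wrong (for example, user corrections or spot-checked labels); the class name and the window, threshold, and `min_samples` values are hypothetical.

```python
from collections import deque


class RollingErrorMonitor:
    """Track the error rate over the last `window` labeled predictions."""

    def __init__(self, window: int = 500, threshold: float = 0.1, min_samples: int = 100):
        self.outcomes = deque(maxlen=window)  # True means the prediction was wrong
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, was_error: bool) -> None:
        self.outcomes.append(was_error)  # the oldest outcome drops out when full

    @property
    def error_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_retrain(self) -> bool:
        # Wait for enough evidence before raising an alert.
        return len(self.outcomes) >= self.min_samples and self.error_rate > self.threshold
```

Because the window is bounded, old outcomes age out, so a sudden rise in errors (for instance, from a new user group) shows up quickly instead of being diluted by months of history.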

7. Putting It All Together

Deploying ML in production is an iterative and collaborative journey. By following a structured lifecycle—scoping your problem, ensuring high-quality data, methodically training and improving your model, then deploying and monitoring it—you equip yourself to handle the unexpected twists that inevitably arise when your system meets real-world data and user behaviors.

Key Takeaways

- The project lifecycle (scoping → data → modeling → deployment) helps avoid surprises and gives structure to your work.

- Data drift is inevitable, so plan to monitor, retrain, and adjust your system when the real-world data changes.

- Data-centric approaches can often deliver faster progress than tweaking architectures alone.

- Moving from proof-of-concept (POC) to production requires careful design of all supporting systems—not just the model.

By understanding these realities, you’ll be better prepared to take your machine learning skills from the lab into real products and platforms that make a tangible difference.

Further Reading

- Hidden Technical Debt in Machine Learning Systems by D. Sculley et al., for insights on the complexity of maintaining ML systems in production.

- Andrew Ng’s Machine Learning Engineering for Production (MLOps) Specialization on Coursera, which covers these topics in depth and provides hands-on experience.

- LandingLens by Landing AI, which showcases how an end-to-end MLOps platform can streamline data management, modeling, and deployment for computer vision projects.